“It’s been a few years since I’ve seen a nascent technology that makes you want to call your friends and say, ‘You gotta see this.’” The respected American technology journalist Casey Newton did not hide his admiration, even his amazement, in his June 10 newsletter, devoted to Dall-E.

This research project fascinates because it can create an image from a simple text description. It is “the artificial intelligence [AI] artist who could change the world,” ran the headline from Alex Kantrowitz, another tech newsletter writer who, like Casey Newton and a New York Times reporter, has been able to test the software, whose second version was launched in April (the first, from January 2021, was less advanced).

The tech sector is enthralled by this innovation from OpenAI, a small San Francisco company at the forefront of AI, backed in particular by Microsoft. The buzz is all the greater since Google announced similar software, Imagen, on May 25. Meanwhile, Dall-E Mini, a simplified version open to everyone, created by an independent researcher with no connection to Dall-E and hosted by Hugging Face, a company founded by three French entrepreneurs, lets Internet users create outlandish images. These dizzying advances in artificial intelligence raise many questions.

The images are impressive in their quality. The works of Dall-E (named after the painter Salvador Dalí and the robot from the science-fiction film WALL-E) and Imagen are often surreal and sometimes humorous. They illustrate written prompts invented by “beta testers” such as Mr. Newton and Mr. Kantrowitz, or by Google: “a prison guard rabbit”, “a shiba dog in a fireman’s suit”, “a bald eagle made of cocoa powder and mango”… After ten to twenty seconds of computation, Dall-E or Imagen does indeed show a white rabbit in a smart dark uniform, a Japanese dog in a firefighter’s outfit, and so on. They can also produce more realistic images, such as “a pastel green and white flower shop facade, with an open door and a large window.”

“Soviet Propaganda”

Dall-E and Imagen can also imitate “styles”: “Rembrandt painting”, photography, digital art, even “Afrofuturism” or “Ikea furniture assembly instructions”. The more rudimentary images from Dall-E Mini, for their part, mimic “courtroom sketches” or “Soviet propaganda”, among others. Dall-E can also edit part of an image, for example replacing the Mona Lisa’s hairstyle with a punk cut…

How does it work? “It’s disturbing: this software looks as if it is intelligent like humans, but it’s not. It’s a combination of several innovations in machine learning,” says Mark Nitzberg, of the Center for Human-Compatible AI at UC Berkeley, near San Francisco.

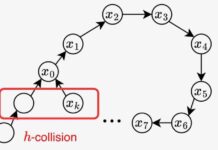

Dall-E and Imagen thus combine language-understanding models (a specialty of OpenAI), learning without human supervision on very large amounts of data, software’s ability to recognize subjects in images, and an image-generation technique called “diffusion”.
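Diffusion is the least intuitive of these ingredients. Roughly, a model is trained on images that have been progressively drowned in random noise and learns to undo that noise; to generate a picture, it starts from pure noise and removes it step by step, steered by the text prompt. The toy Python sketch here illustrates only the forward “noising” half, under assumed textbook settings (1,000 steps, a linear noise schedule); the function names and the four-pixel “image” are hypothetical, and this is not code from OpenAI or Google.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, rising linearly (an assumed, common textbook choice)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of the original signal left."""
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noisy_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * Gaussian noise."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]

if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.8, -0.2, 0.5, 0.1]  # hypothetical four-"pixel" image, values in [-1, 1]
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    for t in (0, 250, 999):
        # Early steps stay close to the image; by the last step it is almost pure noise.
        print(t, round(alpha_bars[t], 4), noisy_sample(image, t, alpha_bars, rng))
```

A real system would then train a large neural network, conditioned on the text, to predict and subtract that noise; that learned reverse process is what actually produces new images.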

Google explains that it trained Imagen in particular on LAION-400M, a database of 400 million images paired with written captions, collected from the Web. One may also wonder to what extent this software draws on its training images, notes Jean Ponce, a “computer vision” specialist at the French National Institute for Research in Digital Science and Technology (Inria), while calling the results “impressive”. Could this raise copyright issues?

This software is already generating debate. “The big question is usage: what is it for?” asks Mr. Ponce. Could Dall-E or Imagen replace artists? The idea seems exaggerated. Asked about it, however, OpenAI says that “AI technologies like Dall-E have the potential to become tools in all areas where images are used to communicate.”

Casey Newton raises the prospect of a “creative revolution”. For Gül Varol, a researcher in computer vision and machine learning at École des Ponts ParisTech, this software could be seen as “a bit like an automated version of Photoshop”, the leading image-editing program. Will it really break into graphic design? In any case, it could compete with graphic designers in some areas and become a source of cheap, easily produced images for social networks, corporate communications or advertising, whether for small businesses, individuals or others.

Potential hazards

The other, deeper question concerns potential dangers: “We share the concerns about possible misuse,” says OpenAI. Access to Dall-E 2 has therefore been limited to a few thousand testers, with 1,000 more added each week. Imagen is not publicly available. OpenAI has also put in place a “moderation policy” to “prohibit the generation of violent, pornographic or political images”: the company says it removed “explicit” content from the training data, “filters” certain words in user prompts and “prevents” the creation of “photorealistic” images, including of public figures. Dall-E will therefore refuse to imagine “Donald Trump killing Joe Biden”…

This precaution reflects another fear, about “misinformation”: “Generating images from text could greatly facilitate the creation of misleading images”, warns Mr. Nitzberg, imagining “fake” images of Ukrainian Nazis entering a village.

Finally, Google acknowledges another “ethical challenge”, common to all machine-learning models trained on “large amounts of data” from the Web: “biases”, that is, “social stereotypes” (racist, sexist, and so on) contained in the images. Imagen or Dall-E will thus tend to depict a “CEO” as a white man, for example. “Mitigating bias is not easy,” admits OpenAI, which wants to encourage “research” on the subject.

Despite these debates, the company “hopes, in the near future, to make Dall-E 2 available via a programming interface [API] so that developers can use it to build applications”.

Beyond that, text-to-image generation is bound to keep making headlines, believes Mr. Nitzberg: the researcher anticipates other software, without safeguards and more realistic than Dall-E Mini. He also imagines a logical but equally dizzying next step: a version for video. Meanwhile, LAION announced a new version of its training database at the end of May, growing from 400 million to 5.85 billion images, nearly fifteen times as many.